Have you already used trees or random forests to model a relationship of a response and some covariates? Then you might like the condtional trees, which are implemented in the party package.

In difference to the CART (Classification and Regression Trees) algorithm, the conditional trees algorithm uses statistical hypothesis tests to determine the next split. Every variable is tested at each splitting step, if it has an association with the response. The variable with the lowest p-value is taken for the next split. This is done until the global null-hypothesis of independence of the response and all covariates can not be rejected.

Conditional trees is my subject in a university seminar this semester. Here are my slides explaining the functionality of conditional trees, which I wanted to share with you. It includes the theory and two short examples in R.

Subscribe to:

Post Comments (Atom)

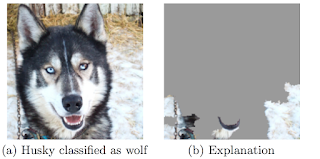

Explaining the decisions of machine learning algorithms

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Lately I had to write a seminar paper for a class and I decided to overdo it. But let's start at the very beginning. Here is my evoluti...

-

Have you already used trees or random forests to model a relationship of a response and some covariates? Then you might like the condtional ...

Thanks for the great presentation! Now I have another modelling tool in my belt :)

ReplyDeleteI saw your post. Good job! Nice to see a comparison with other model approaches.

ReplyDeleteI found your seminar paper "Recursive partitioning by conditional inference" very helpful to understand Hothorn 2006.

ReplyDeleteBy the way, using ctree (eventually cforest) to analyze magneto-encephalogrphic data. Thank you very much for this. Will cite this in myt methods paper I'm writing.

Cheers,

Antoine Tremblay

This comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDelete