Neural networks can model the relationship between input variables and output variables. A neural network is built of connected artificial neurons, so to start, it is best to look at the architecture of a single neuron.

Artificial neurons are inspired by the architecture and function of the nerve cells that make up the brain. A neuron in the brain can receive multiple input signals, process them and fire a signal, which in turn can be the input of other neurons. The output is binary: depending on the input, the signal is either fired (1) or not fired (0).

The artificial neuron has inputs, which we call \(x_1, x_2, \dots, x_p\). There can be an additional input \(x_0\), which is always set to \(1\) and is often referred to as the bias. The inputs are weighted with weights \(w_1, w_2, \dots, w_p\), plus \(w_0\) for the bias. With the inputs \(x_{i1}, \dots, x_{ip}\) of observation \(i\) and the weights, we can calculate the activation of the neuron \[ a_i = \sum_{k=1}^{p} w_k x_{ik} + w_0. \]
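To make the formula concrete, here is a minimal Python sketch of the activation for a single observation. The function name `activation` and the example numbers are illustrative choices, not part of the original post; the bias is handled as a separate weight `w0` because its input \(x_0\) is always 1.

```python
def activation(x, w, w0):
    """Activation a_i = sum_k w_k * x_ik + w_0 for one observation i.

    x:  inputs x_i1, ..., x_ip of the observation
    w:  weights w_1, ..., w_p
    w0: bias weight w_0 (its input x_0 is always 1)
    """
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    return sum(w_k * x_k for w_k, x_k in zip(w, x)) + w0


# Example with p = 3 inputs: 0.5*1 - 1*2 + 0.25*3 + 0.25
print(activation([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], w0=0.25))  # -0.5
```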

The output of the neuron is a function of its activation, and we are free to choose which function to use. If the output should be binary or lie in the interval \([0, 1]\), a good choice is the logistic function.

So the calculated output of the neuron for observation \(i\) is \[ o_i = \frac{1}{1 + \exp(-a_i)}. \]
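A minimal Python sketch of the logistic output, assuming plain floats as inputs. The names `logistic` and `neuron_output` are hypothetical; the two-branch form only avoids an `OverflowError` from `math.exp` for large negative activations and computes the same value as the formula above.

```python
import math


def logistic(a):
    """Logistic function 1 / (1 + exp(-a)); the result lies in (0, 1)."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    # For negative a, exp(-a) can overflow, so use the equivalent
    # form exp(a) / (1 + exp(a)).
    e = math.exp(a)
    return e / (1.0 + e)


def neuron_output(x, w, w0):
    """Output o_i of a single neuron: the logistic of its activation a_i."""
    a = sum(w_k * x_k for w_k, x_k in zip(w, x)) + w0
    return logistic(a)


print(logistic(0.0))  # 0.5
print(neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], 0.25))  # about 0.3775
```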

Pretty straightforward, isn't it? If you know logistic regression, this might already look familiar: a single neuron with the logistic function as output computes the same model.

Now you know the basic structure. The next step is to "learn" the right weights for the inputs. For this you need a so-called loss function, which tells you how wrong your predicted output is. The loss function depends on your calculated output \(o_i\) (which in turn depends on your data \(x_{i1}, \dots, x_{ip}\) and the weights) and, of course, on the true output \(y_i\). Your training data set is fixed, so the only variables in the loss function are the weights \(w_k\). Low values of the loss function tell you that your neuron makes accurate predictions.

One simple loss function is the squared difference \((y_i - o_i)^2\). A more sophisticated choice is \(-\left[ y_i \ln(o_i) + (1-y_i) \ln(1-o_i) \right]\), which is the negative log-likelihood of observation \(i\) if you interpret \(o_i\) as the probability that the output is 1; summing it over all observations gives the negative log-likelihood of your data. So minimizing the negative log-likelihood is the same as maximizing the likelihood of your parameters given your training data set.
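Both losses can be sketched in a few lines of Python. This is an illustrative version assuming true outputs \(y_i \in \{0, 1\}\); the clipping constant `eps` is an added assumption that keeps \(\ln(0)\) from occurring when a prediction is exactly 0 or 1, and the toy labels and predictions are made up. Summing the per-observation losses over the training set gives the quantity the weights should minimize.

```python
import math


def squared_loss(y, o):
    """Squared difference (y_i - o_i)^2 for one observation."""
    return (y - o) ** 2


def neg_log_likelihood(y, o, eps=1e-12):
    """-[y_i ln(o_i) + (1 - y_i) ln(1 - o_i)] for a true output y_i in {0, 1}."""
    # Keep o strictly inside (0, 1) so that the logarithms stay finite.
    o = min(max(o, eps), 1.0 - eps)
    return -(y * math.log(o) + (1 - y) * math.log(1.0 - o))


def total_loss(ys, outputs, loss):
    """Sum of the per-observation losses over the whole training set."""
    return sum(loss(y, o) for y, o in zip(ys, outputs))


ys = [1, 0, 1]
good = [0.9, 0.2, 0.8]  # predictions close to the true outputs
bad = [0.3, 0.7, 0.4]   # predictions far from the true outputs
print(total_loss(ys, good, neg_log_likelihood))
print(total_loss(ys, bad, neg_log_likelihood))  # larger than the line above
```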

The first step toward understanding neural networks is done! The next thing to look at is the gradient descent algorithm, a method for finding weights that minimize the loss function.
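As a preview, here is a minimal sketch of plain batch gradient descent for a single logistic neuron, minimizing the mean negative log-likelihood. It relies on the standard result that the derivative of \(-[y_i \ln(o_i) + (1-y_i)\ln(1-o_i)]\) with respect to \(w_k\) is \((o_i - y_i)\,x_{ik}\) (and \(o_i - y_i\) for \(w_0\)). The function name `fit_neuron`, the learning rate, the number of steps and the toy data are assumptions chosen for illustration, not tuned values.

```python
import math


def logistic(a):
    """Numerically stable logistic function 1 / (1 + exp(-a))."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def fit_neuron(X, y, learning_rate=0.5, steps=1000):
    """Fit w_1..w_p and w_0 by batch gradient descent on the mean negative log-likelihood.

    X: list of observations, each a list [x_i1, ..., x_ip]
    y: list of true outputs y_i in {0, 1}
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    w0 = 0.0
    for _ in range(steps):
        grad_w = [0.0] * p
        grad_w0 = 0.0
        for x_i, y_i in zip(X, y):
            o_i = logistic(sum(w_k * x_k for w_k, x_k in zip(w, x_i)) + w0)
            # Gradient of the per-observation loss: (o_i - y_i) * x_ik for w_k,
            # and (o_i - y_i) for the bias weight w_0.
            err = o_i - y_i
            for k in range(p):
                grad_w[k] += err * x_i[k] / n
            grad_w0 += err / n
        # Take a step against the gradient.
        w = [w_k - learning_rate * g_k for w_k, g_k in zip(w, grad_w)]
        w0 -= learning_rate * grad_w0
    return w, w0


# Toy data with one input: the true output is 1 for positive inputs.
X = [[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]]
y = [0, 0, 0, 1, 1, 1]
w, w0 = fit_neuron(X, y)
predicted = [1 if logistic(w[0] * x[0] + w0) > 0.5 else 0 for x in X]
print(predicted)  # [0, 0, 0, 1, 1, 1]
```

Because this toy data set is perfectly separable, the weight keeps growing the longer you train (the likelihood can always be increased a little further), which is why the sketch simply stops after a fixed number of steps.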

Have fun!

Subscribe to:

Post Comments (Atom)

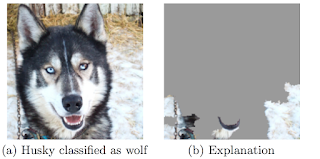

Explaining the decisions of machine learning algorithms

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Have you already used trees or random forests to model a relationship of a response and some covariates? Then you might like the condtional ...

-

Lately I had to write a seminar paper for a class and I decided to overdo it. But let's start at the very beginning. Here is my evoluti...

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.