I have started taking part in various competitions on Kaggle and CrowdANALYTIX. The goal of most competitions is to predict a certain outcome given some covariates. It is a lot of fun trying out different methods such as random forests, boosted trees, GAM boosting, the elastic net and other models. Although I still feel that my predictions are not very good, I have already learned a lot.

As a student I have quite some time for these competitions, which is really great, but the time I can spend is nonetheless limited, so it is in my interest to work efficiently. The technical part, such as preprocessing the data or creating the folder structure, is often the same from one competition to the next and can be done quickly. I would rather spend most of my time learning new methods. In my first competitions I wasted some time on various technical things.

As an exemplary statistician I naturally use R. So the short code snippets are in R.

Here are my top ways to waste time:

1. Forget to remove the ID variable from your training data set.

This is especially painful for random forests (with the packages randomForest or party): the ID variable has many distinct values, so it offers many candidate split points for a decision tree, and it tends to receive a high importance in your random forest. So just using

train[names(train) != "ID"], where train is your training data set, gets you out of this situation.
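As a minimal sketch of dropping the identifier, here is a toy data frame (the column names ID, x and y are assumptions for illustration). Selecting columns by a logical comparison on the names also avoids a pitfall of the negative-index idiom with -which(), which returns zero columns when no column matches.

```r
# Toy training data; the column names are assumptions for illustration
train <- data.frame(
  ID = 1:5,
  x  = c(2.1, 3.4, 1.7, 4.0, 2.9),
  y  = c(0, 1, 0, 1, 1)
)

# Keep every column except the identifier
train_no_id <- train[, names(train) != "ID", drop = FALSE]
names(train_no_id)

# Pitfall: if the name does not match (wrong case here on purpose),
# which() returns integer(0) and the result has no columns at all
empty <- train[-which(names(train) == "id")]
ncol(empty)
```

If the column name is misspelled, the logical version simply keeps all columns, which is easier to notice than a silently empty data frame.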

2. Do data preprocessing separately for the test and training data sets, because copying and pasting code was and always will be good programming style.

This seems very obvious, but it can save some time to call

all <- rbind(train, test)

after reading in the test and training data sets. (This requires that test has the same columns as train, with the response set to NA.) After binding both together you can impute your data, transform variables and so on. Afterwards you simply split the combined data frame again: all rows of all that have NA in the response variable go to the test data frame, and all rows with a non-missing response go to the training data set.

Maybe there is a reason (which I do not know yet) why it should be done separately, but as long as I do not know this reason, I will do it as described.
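The combine, preprocess and split workflow from this point can be sketched as follows. The toy data, the median imputation and the log transform are assumptions chosen for illustration, and the sketch assumes that the training response itself contains no NA values, since NA in the response is what marks a test row.

```r
# Toy data; column names and values are assumptions for illustration
train <- data.frame(x = c(1.2, NA, 3.1, 4.8), y = c(0, 1, 0, 1))
test  <- data.frame(x = c(2.5, NA))
test$y <- NA   # test rows get a missing response so the columns match

all_data <- rbind(train, test)

# Shared preprocessing, written only once:
# median imputation followed by a log transform
all_data$x[is.na(all_data$x)] <- median(all_data$x, na.rm = TRUE)
all_data$log_x <- log(all_data$x)

# Split again by whether the response is missing
train_prep <- all_data[!is.na(all_data$y), ]
test_prep  <- all_data[is.na(all_data$y), ]

dim(train_prep)
dim(test_prep)
```

The combined data frame is called all_data here rather than all so that it does not share a name with base R's all() function. Note that the imputed median is computed from training and test rows together.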

3. Do not check your predicted values for plausibility, but submit blindly

It is easy to make a technical mistake when fitting your model or making your prediction. A classic one is forgetting to set type = "response" when you have, for example, fitted a logistic regression model and want to predict on your test data. In that case your prediction will be the linear predictor and the values will not make much sense. A quick summary() of your predicted values shows whether they are plausible. In the logistic regression case you would notice that the values do not lie in the interval between 0 and 1. This can save you a precious submission slot. In some competitions you can make only one submission per day, and it is really annoying to waste one on a mistake that could easily have been avoided.
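A minimal sketch of this check with simulated data (the data-generating model and the variable names are assumptions for illustration): predict() on a glm returns the linear predictor by default, and only type = "response" gives probabilities.

```r
# Simulated binary outcome; the true model is an assumption for illustration
set.seed(1)
train <- data.frame(x = rnorm(100))
train$y <- rbinom(100, 1, plogis(0.5 + 1.5 * train$x))
test <- data.frame(x = rnorm(20))

fit <- glm(y ~ x, data = train, family = binomial)

# Default: values on the scale of the linear predictor (log-odds)
pred_link <- predict(fit, newdata = test)
# What a submission usually needs: probabilities
pred_prob <- predict(fit, newdata = test, type = "response")

summary(pred_link)   # not restricted to [0, 1]
summary(pred_prob)   # always within [0, 1]

# A cheap guard to run before writing the submission file
stopifnot(all(pred_prob >= 0 & pred_prob <= 1))
```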

4. Don't save your final model, but only the predicted values

I find it useful to save every reasonable model you have fitted, random forest you have computed or regression you have boosted. The predicted values are not the only interesting result. Especially for random forests or boosting methods, the important or selected variables can be of major interest. So don't forget to save the model, which may have taken hours to compute. Don't throw away hours of computing time so easily.
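A minimal sketch of saving and restoring a fitted model. A small lm() on the built-in cars data stands in for an expensive model, and saveRDS()/readRDS() are used here as one option; save() and load() with an .RData file work as well. The file name and the use of tempdir() are assumptions for illustration.

```r
# Stand-in for a model that took hours to fit
fit <- lm(dist ~ speed, data = cars)

# Write the whole model object to disk, not just its predictions
model_file <- file.path(tempdir(), "lm-dist-speed.rds")
saveRDS(fit, model_file)

# Later (or in another session): restore it and keep working with it
fit_again <- readRDS(model_file)
coef(fit_again)
predict(fit_again, newdata = data.frame(speed = c(10, 20)))
```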

5. An algorithm is very slow? Make sure you run it with all variables and the maximum possible number of iterations

This is quite a general remark. Suppose you have a for loop that fits models and stores some values in, say, a data frame, and your code contains a small mistake that only throws an error at the very end of the procedure: you are going to have a bad time. It is better to first test an algorithm with just a few iterations and/or a subset of the variables, to get a feeling for how much time it will take and to see whether your code works as expected.
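A minimal sketch of such a dry run: the loop is wrapped in a function whose number of iterations is a parameter, run with a handful of iterations first, and timed with system.time(). The function name fit_many, the bootstrap loop and the planned 1000 iterations are hypothetical choices for illustration.

```r
# Hypothetical fitting loop: bootstrap a small linear model n_iter times
fit_many <- function(data, n_iter) {
  results <- data.frame(iter = integer(n_iter), r_squared = numeric(n_iter))
  for (i in seq_len(n_iter)) {
    idx <- sample(nrow(data), replace = TRUE)   # bootstrap sample of rows
    fit <- lm(mpg ~ wt + hp, data = data[idx, ])
    results$iter[i] <- i
    results$r_squared[i] <- summary(fit)$r.squared
  }
  results
}

# Dry run with 5 iterations: does it finish, and does the output look right?
set.seed(42)
timing <- system.time(trial <- fit_many(mtcars, n_iter = 5))
str(trial)

# Rough extrapolation to the planned full run of 1000 iterations (seconds)
est_full <- timing[["elapsed"]] / 5 * 1000
est_full
```

Only once the small run produces sensible output is it worth starting the full one.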

6. Don't document your models or anything, because you are just trying things out

I recently started to save each model under a descriptive filename (for example glmnet-alpha-0.1-lambda-0.01-reduced-data.RData), to comment my R code cleanly and to give my predicted values descriptive filenames as well. That was a huge improvement over the sloppy working style I had at the beginning, because it is much easier to keep track of what you have already done. Especially in longer competitions, the number of different data sets, models, R scripts and submissions can become large. So it is good practice to have a consistent folder structure and to give every file a self-explanatory name. Even if you are just trying one thing out, take the time to write the code properly and don't produce countless messy files.
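One way to keep such names consistent is to build them from the model settings instead of typing them by hand. This is only a sketch: the helper model_filename(), its arguments and the models folder are hypothetical.

```r
# Hypothetical helper: build a descriptive file name from the method,
# a named list of tuning parameters and a tag for the data set used
model_filename <- function(method, params, data_tag, dir = "models") {
  param_part <- paste(names(params), unlist(params), sep = "-", collapse = "-")
  file.path(dir, paste0(method, "-", param_part, "-", data_tag, ".RData"))
}

fname <- model_filename("glmnet", list(alpha = 0.1, lambda = 0.01), "reduced-data")
fname
# "models/glmnet-alpha-0.1-lambda-0.01-reduced-data.RData"
```

The same helper can be reused for the prediction files, so that a submission can always be traced back to the model that produced it.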

These are just a few things that improved and sped up my workflow. My future plan is to become friends with Git and GitHub.

I hope this post helps other people who are getting started with predictive statistics to save some time.

If you have things to add to the list, please comment.

Subscribe to:

Post Comments (Atom)

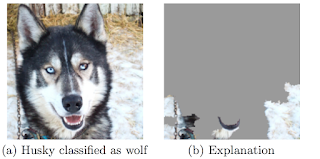

Explaining the decisions of machine learning algorithms

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Being both statistician and machine learning practitioner, I have always been interested in combining the predictive power of (black box) ma...

-

Lately I had to write a seminar paper for a class and I decided to overdo it. But let's start at the very beginning. Here is my evoluti...

-

Have you already used trees or random forests to model a relationship of a response and some covariates? Then you might like the condtional ...

Some advice on comment one: You can also use row.names(dat) <- dat$id; dat$id <- NULL. This way you keep the IDs but they don't affect your model.

That's a very good solution. Thanks for that.

Also there's a more general version of point 1, which is to be wary of any variable whose distribution in the test set is significantly different from the training set.

Thanks for taking part in CrowdANALYTIX competitions, and for the interesting insights. We'll try to ensure the ID variable is avoided in the datasets in future. Please let me know if you have queries about CA.

I remember reading a little debate about point 2: when you do feature engineering or imputation on the combined train and test data, your features contain some information about the test set (overfitting the test set).

This is often regarded as a necessary evil in order to get a good score in a competition, but it will probably not generalize well when you implement it in the real world.

Yes, that's true. On the other hand the imputation becomes more stable, because you have more observations of the covariables.